Featured image: The Simplex Algorithm

Algorithms have become a hot topic of political lament in the last few years. The literature is expansive: Christopher Steiner’s upcoming book Automate This: How Algorithms Came to Rule Our World attempts to lift the lid on how human agency is largely helpless in the face of precise algorithmic bots that automate the majority of daily life and business. The matter is also being approached historically, with Chris Bishop and John MacCormick’s Nine Algorithms That Changed the Future outlining the specific construction and broad use of these procedures (such as Google’s powerful PageRank algorithm, and others used in string searching (i.e. regular expressions), cryptography, compression, and Quicksort for database management). The Fast Fourier Transform, first developed in 1965 by J. W. Cooley and John Tukey, was designed to compute the much older mathematical discovery of the Discrete Fourier Transform,* and is perhaps the most widely used algorithm in digital communications, responsible for breaking down irregular signals into their pure sine-wave components. However, the point of this article is to critically analyse what the specific global dependencies of algorithmic infrastructure are, and what they’re doing to the world.

A name which may spring forth in most people’s minds is the former employee of Zynga, founder of social games company Area/Code and self-described ‘entrepreneur, provocateur, raconteur’ Kevin Slavin. In his famously scary TED talk, Slavin outlined the lengths Wall Street traders were prepared to go to in order to construct faster and more efficient algo-trading transactions, such as Spread Networks building an 825-mile, ‘one signal’ trench between NYC and Chicago, or gutting entire, strategically positioned NYC apartments to install heavy-duty server farms. All of this effort is labelled as ‘investment’ for the sole purpose of transmitting a deal-closing, revenue-building algorithm which can be executed 3 – 5 microseconds faster than all the other competitors.

A subset of these are purpose-built algorithms which make speedy micro-profits from large volumes of trades, otherwise known as ‘high-speed’ or ‘high-frequency’ traders (HFT). Such trading times can be divided into billionths of a second on a mass scale, with the ultimate goal of making trades before any possible awareness from rival systems. Other sets of trading rely on unspeakably complicated mathematical formulas to trade on brief movements in the relationship between security risks. With little to no regulation (as you would expect), the manipulation of stock prices is already a rampant activity.

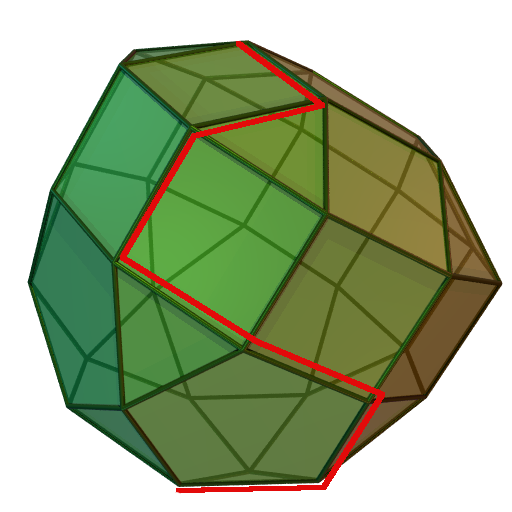

The Simplex Algorithm, originally developed by George Dantzig in the late 1940s, is widely responsible for solving large-scale optimisation problems in big business and (according to the optimisation specialist Jacek Gondzio) it runs at “tens, probably hundreds of thousands of calls every minute”. With its origins in multidimensional geometry, the Simplex method arrives at optimal solutions, such as maximum profit or the best orientation of an extensive distribution network, by working through constraints. It’s a truism in certain circles to suggest that almost all corporate and commercial CPUs are executing Dantzig’s Simplex algorithm, which determines almost everything from work schedules and food prices to bus timetables and trade shares.
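To make the geometry a little more concrete, here is a minimal sketch of the kind of problem Simplex solves. It is not the Simplex method itself: it brute-forces every corner of the feasible region, relying on the fact that a linear optimum (when one exists) sits at a corner, whereas Simplex walks from corner to corner far more economically. The toy problem, the profit figures and all the names (`CONSTRAINTS`, `PROFIT`, `best_vertex`) are invented for illustration.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical toy problem (not from the article): maximise 3x + 5y
# subject to x <= 4, 2y <= 12, 3x + 2y <= 18, x >= 0, y >= 0.
# Each constraint is (a, b, c), meaning a*x + b*y <= c.
CONSTRAINTS = [
    (1, 0, 4),
    (0, 2, 12),
    (3, 2, 18),
    (-1, 0, 0),  # x >= 0
    (0, -1, 0),  # y >= 0
]
PROFIT = (3, 5)


def intersection(c1, c2):
    """Point where two constraint boundaries cross, or None if they are parallel."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    # Cramer's rule, kept exact with fractions.
    x = Fraction(r1 * b2 - r2 * b1, det)
    y = Fraction(a1 * r2 - a2 * r1, det)
    return x, y


def feasible(point):
    """True if the point satisfies every constraint."""
    x, y = point
    return all(a * x + b * y <= c for a, b, c in CONSTRAINTS)


def best_vertex():
    """Brute force: test every feasible corner and keep the most profitable."""
    vertices = []
    for c1, c2 in combinations(CONSTRAINTS, 2):
        p = intersection(c1, c2)
        if p is not None and feasible(p):
            vertices.append(p)
    return max(vertices, key=lambda p: PROFIT[0] * p[0] + PROFIT[1] * p[1])


x, y = best_vertex()
print(x, y, PROFIT[0] * x + PROFIT[1] * y)  # prints: 2 6 36
```

With two variables and five constraints there are only ten pairs of boundaries to check. With thousands of variables the number of corners explodes, which is why a method that visits only a small fraction of them matters so much commercially.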

But on a more basic level, within the supposedly casual and passive act of browsing information online, algorithms are constructing more and more of our typical experiences on the Web. Moreover they are constructing and deciding what content we browse for. A couple of weeks ago John Naughton wrote a rather Foucauldian piece for the Guardian online, commenting on the multitude of algorithmic methods which secretly shape our behaviour. It’s the usual rhetoric, with Naughton treating algorithms as if they silently operate in secret, through the screens in global board rooms and the shadowy corners of back offices, dictating the direction of our world – X-Files style.

‘They have probably already influenced your Christmas shopping, for example. They have certainly determined how your pension fund is doing, and whether your application for a mortgage has been successful. And one day they may effectively determine how you vote.’

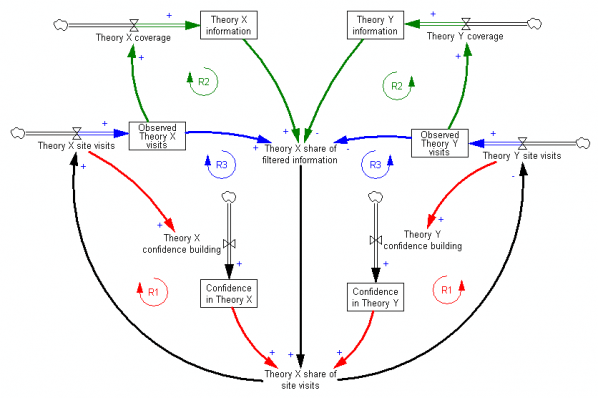

The political abuse here is retained in the productive means of generating information and controlling human consumption. Naughton cites an article last month by Nick Diakopoulos, who warns that not only are online news environments saturated with generative algorithms, but they also reveal themselves to be biased while masquerading as ‘objective’. The main flaw in this is ‘Summarisation’: relatively naive decision criteria, inputted into a functional algorithm (no matter how well-designed and well-intentioned), can produce biased outputs that exclude and prioritise certain political, racial or ethical views. In another (yet separate) TED talk, Eli Pariser makes similar comments about so-called “filter bubbles”: unintended consequences of personal editing systems which narrow news search results, because highly developed algorithms interpret your historical actions and specifically ‘tailor’ the results. Presumably it’s for liberal self-improvement, unless one mistakes technocratic solipsism for self-improvement.

Earlier this year, Nextag CEO Jeffrey Katz wrote a hefty polemic against the corporate power of Google’s biased PageRank algorithm, expressing doubt about its capability to objectively search for other companies aside from its own partners. This was echoed in James Grimmelmann’s essay, ‘Some Skepticism About Search Neutrality’, for the collection The Next Digital Decade. Grimmelmann gives a heavily detailed exposition on Google’s own ‘net neutrality’ algorithms and how biased they happen to be. In short, PageRank doesn’t simply decide relevant results, it decides visitor numbers, and he concluded on this note:

‘With disturbing frequency, though, websites are not users’ friends. Sometimes they are, but often, the websites want visitors, and will be willing to do what it takes to grab them.’

But let’s think about this; it’s not as if, on a formal, computational level, anything has changed. Algorithmic step-by-step procedures are, mathematically speaking, as old as Euclid. Very old. Indeed, reading this article wouldn’t even be possible without two algorithms in particular: the Universal Turing Machine, the theoretical template for programming which is sophisticated enough to mimic all other Turing Machines, and the 1957 Fortran Compiler, the first complete algorithm to convert source code into executable machine code, and the pioneering algorithm responsible for early languages such as COBOL.
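For a sense of what a step-by-step procedure ‘as old as Euclid’ looks like, here is a minimal sketch of Euclid’s algorithm for the greatest common divisor. The function name and the sample numbers are chosen purely for illustration.

```python
def gcd(a: int, b: int) -> int:
    """Euclid's algorithm: replace (a, b) with (b, a mod b) until b is zero."""
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(1071, 462))  # prints: 21
```

The loop runs without supervision, and it is guaranteed to stop with an answer because the second number shrinks at every step.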

Moreover, it’s not as if computation itself has become more powerful; rather, it has been given a larger, expansive platform to operate in. The logic of computation, the formalisation of algorithms, or the ‘secret sauce’ (as Naughton whimsically puts it), have simply fulfilled their general purpose, which is to say they have become purposely generalised in most, if not all, corners of Western production. As Cory Doctorow put it at 2011’s 28C3 and throughout last year, ‘We don’t have cars anymore, we have computers we ride in; we don’t have airplanes anymore, we have flying Solaris boxes with a big bucketful of SCADA controllers.’ Any impact on one corner of computational use affects another type of similar automation.

Algorithms in-themselves, then, haven’t really changed; they have simply expanded their automation. Securing, compressing, trading, sharing, writing, exploiting. Even machine-learning, a name which implies myths of self-awareness and intelligence, is only created to make lives easier through automation and function.

The fears surrounding their control are an expansion of this automated formalisation, not something remarkably different in kind. It was inevitable that in a capitalist system, effective procedures which produce revenue would be increasingly automated. So one should make the case that the controlling aspect of algorithmic behaviour be tracked within this expansion (which is not to say that computational procedures are inherently capitalist). To understand algorithmic control is to understand what the formal structure of algorithms is and how they are used to construct controlling environments. Before one can inspect how algorithms are changing daily life and environmental space, it is helpful to understand what algorithms are and, on a formal level, how they both work and don’t work.

The controlling, effective and structuring ‘power’ of algorithms is simply a product of two main elements intrinsic to the formal structure of the algorithm itself, as originally presupposed by mathematics: these two elements are Automation and Decision. If it is to be built for an effective purpose (capitalist or otherwise), an algorithm must simultaneously do both.

For Automation purposes, the algorithm must be converted from a theoretical procedure into an equivalent automated, mechanical ‘effective’ procedure (inadvertently, this is an accurate description of the Church-Turing thesis, a conjecture which formulated the beginnings of computing in its mathematical definition).

Although it is sometimes passed over as obvious, algorithms are also designed for decisional purposes. Algorithms must also be programmed to ‘decide’ on a particular user input or decide on the optimal result from a set of possible alternatives. The algorithm has to be programmed to decide on the difference between a query which is ‘profitable’ or ‘loss-making’, or a set of shares which are ‘secure’ or ‘insecure’, or to decide the optimal path amongst millions of variables and constraints, or to locate various differences between ‘feed for common interest’ and ‘feed for corporate interest’. When any discussion arises on the predictive nature of algorithms, it operates on the suggestion that the algorithm can decide an answer or reach the end of its calculation.

Code both elements together consistently and you have an optimal algorithm which functions effectively, automating the original decision as directed by the individual or company in question. This is what can typically be denoted as ‘control’ – determined action at a distance. But that doesn’t mean that an algorithm suddenly emerges with both elements from the start. They are not the same thing, although they are usually mistaken to be: negotiations must arise according to which elements are to be automated and which are to be decided.

But code both elements, or either element, inconsistently and you have a buggy algorithm, no matter what controlling functionality it’s used for. If it is automated, but can’t ultimately decide on what is profit or loss, havoc ensues. If it can decide on optimised answers, but can’t be automated effectively, then its accuracy and speed are only as good as those controlling it, making the algorithm’s automation ineffective, unreliable, or only as good as human supervision.

“Algorithmic control”, then, is a dual product of getting these two elements to work, and my suggestion here is that any resistance to that control comes from separating the two, or at least understanding and exploiting the pragmatic difficulties of getting the two to work. So looking at both elements separately (and very quickly), there are two conflicting political issues going on and thus two opposing mixtures of control and non-control:

Firstly there is the privileging of automation in algorithmic control. This, as Slavin asserts, examines algorithms as unreadable “co-evolutionary forces” which one must understand alongside nature and man. The danger that faces us consists in blindly following the automated whims of algorithms no matter what they decide or calculate. Decision-making is limited to the speed of automation. This view is one of surrendering calculation and opting for speed and blindness. These algorithms operate as perverse capitalist effective procedures, supposedly generating revenue and exploiting users on their own well enough (and better than any human procedure). The role of their creators and co-informants is then best suited to improving the algorithm’s conditions for automation or increasing its speed of calculation.

Relative to the autonomous “nature” of algorithms, humans are likely to leave them unchecked and unsupervised, and in turn they lead to damaging technical glitches which inevitably cause certain fallouts, such as the infamous “Flash Crash” loss and regain on May 6th 2010 (it’s worrying to note that two years on, hardly anyone knows exactly why this happened, precisely insofar as no answer was decided). The control established in automation can flip into an unspeakable mode of being out of control, or being subject to the control of an automaton, the consequences of which can’t be fully anticipated until it ruins the algorithm’s ability to decide an answer. The environment is now subject to its efficiency and speed.

But there is also a contradictory political issue concerning the privileging of decidability in algorithmic control. This, as Naughton and Katz suggest, is located in the closed elements of algorithmic decision and function: algorithms built specifically to decide results which only favour and benefit the ruling elite who have built them for specific effective purposes. These algorithms not only shape the way content is structured, they also shape the access of online content itself, determining consumer understanding and its means of production.

This control occurs in the aforementioned Simplex Algorithm, the formal properties of which decide nearly all commercial and business optimising, from how best to roster staff in supermarkets to deciding how many finite machine resources can be used in server farms. Its control is global, yet it too faces a problem of control in that its automation is limited by its decision-making. Thanks to a mathematical conjecture originating with US mathematician Warren Hirsch, there is no developed method for finding a more effective algorithm, causing serious future consequences for maximising profit and minimising cost. In other words, the primacy of decidability reaches a point where its automation is struggling to support the real world it has created. The algorithm is now subject to the working environment’s appetite for efficiency and speed.

This is the opposite of privileging automation – the environment isn’t reconstructed to speed up the algorithm’s automation capabilities irrespective of answers; rather, the algorithm’s limited decision capabilities are subject to the environment, which now desires solutions and increased answers. If the modern world cannot find an algorithm which decides more efficiently, modern life reaches a decisive limit. Automation becomes limited.

——————–

These are two contradictory types of control; once one is privileged, the other recedes from view. Code an algorithm to automate at speed, but risk automating undecidable, meaningless, gibberish output; or code an algorithm to decide results completely, but risk the failure to be optimally autonomous. In both cases, the human dependency on either automation or decision crumbles, leading to unintended disorder. The whole issue does not lead to any easy answers; instead it leads to a tense, antagonistic network of algorithmic actions struggling to fully automate or decide, never entirely obeying the power of control. Contrary to the usual understanding, algorithms aren’t monolithic, characterless beings of generic function, to which humans adapt and adopt, but complex, fractured systems to be negotiated and traversed.

In between these two political issues lies our current, putrid situation as far as the expansion of computation is concerned – a situation in which computational artists have more to say about it than perhaps they think they do. Their role is more than mere commentary, but a mode of traversal. Such an aesthetics has the ability to roam the effects of automation and decision, examining their actions even while they are, in turn, determined by them.

* With special thanks to the artist and writer Paul Brown, who pointed out the longer history of the FFT to me.