For its closing community gathering of the year, the Disruption Network Lab organised a conference to extend its 2019 programme ‘The Art of Exposing Injustice’ and connect it with social and cultural initiatives, fostering direct participation and enhancing engagement around the topics discussed throughout the year. Transparency International Deutschland, Syrian Archive, and Radical Networks are some of the organisations and communities that have taken part in DNL activities and were directly involved in this conference on 30 November, entitled ‘Activation: Collective Strategies to Expose Injustice’, which addressed anti-corruption, algorithmic discrimination, systems of power, and injustice – a culmination of the meet-up programme that ran parallel to the three conferences of 2019.

The day opened with the talk ‘Untangling Complexity: Working on Anti-Corruption from the International to the Local Level,’ a conversation with Max Heywood, global outreach and advocacy coordinator for Transparency International, and Stephan Ohme, lawyer and financial expert from Transparency International Deutschland.

In the conference ‘Dark Havens: Confronting Hidden Money & Power’ (April 2019), DNL focused its work on offshore financial systems and global networks of international corruption involving not only secretive tax havens, but also financial institutions, systems of law, governments, and corporations. On that occasion, DNL hosted discussions about the Panama Papers and other relevant leaks that exposed hundreds of cases of tax evasion through offshore regimes. With the contribution of whistleblowers and people involved in investigations, the panels unearthed how EU institutions turn a blind eye to billions of euros’ worth of wealth that disappears, not always out of sight of local tax authorities, and how – despite the global outrage caused by investigations and leaks – the practice of billionaires and corporations stashing their cash in tax havens is still very common.

Introducing the talk ‘Untangling Complexity,’ Disruption Network community director Lieke Ploeger asked the two members of Transparency International and its local chapter Transparency International Deutschland to take stock after a year-long cooperation with the Lab, during which they have been demonstrating that, in order to expose and defeat corruption, it is necessary to make complexity transparent and simple. With chapters in more than 100 countries and an international secretariat in Berlin, Transparency International works on anti-corruption at the international and local level through a participatory global effort, which is the only effective way to untangle the complexity of the hidden mechanisms of international tax evasion and corruption.

Such crimes are very difficult to detect and, as Heywood explained, transparency is too often interpreted as the simple availability of documents and information. It requires instead a higher degree of participation, since documents and information must be made comprehensible, both individually and in their connections. In many cases, corruption and illegal financial activities are shielded behind technicalities and solid legal bases that make them hard to uncover. Within complicated administrative structures, among millions of documents and terabytes of files, an investigator is asked to find evidence of wrongdoing, corruption, or tax evasion. Most of the work lies in connecting the dots, combining data and metadata to outline a hidden structure of power and corruption. As in a big puzzle, all pieces are connected. But those pieces are often so numerous that only a collective effort can allow scrutiny. That is why a law that allows transparency in Berlin on real estate and private funds, for example, might help in a case of corruption somewhere else in the world. In anti-corruption work, exactly as in the financial system, nothing is just local, and the cooperation of many actors is essential to achieve results.

The recent case of public Country-by-Country Reporting illustrates the situation in Europe. It was an initiative proposed by the European Commission in 2015 in its ‘Action Plan for Fair and Efficient Corporate Taxation’. It aimed at amending the existing legislation to require multinational companies to publicly disclose their income tax in each EU member state they work in. The disclosure required is modest, and the proposal applies only to companies with a turnover of at least €750 million: the aim is to know how much profit they generate and how much tax they pay in each of the 28 member states. However, many are still reluctant to agree, especially those that favour profit-shifting within the EU. Some, including Germany, worry that revealing companies’ tax and profit information publicly will give a competitive advantage to companies outside Europe that do not have to report such information. Twelve countries voted against the new rules, all member states with low-tax environments that help to shelter the profits of the world’s biggest companies. Luxembourg is one of them. According to the International Monetary Fund, the country, with its 600,000 citizens, hosts as much foreign direct investment as the USA, raising the suspicion that most of this flow goes to “empty corporate shells” designed to reduce tax liabilities in other EU countries.

Moreover, in every EU country, there are voices from the industrial establishment against this proposal. In Germany, the Foundation of Family Businesses, which despite its name safeguards the interests of big companies, as Ohme remarked, claims that enterprises are already subject to ever stronger social control through the continuously growing number of disclosure requirements. It complains about what it considers the negative consequences of public Country-by-Country Reporting for businesses, stating that member states should withhold their consent, as it would considerably damage companies’ competitiveness and turn the EU into a nanny state. But, apart from the expectations and the lobbying activities of the industrial élite, European citizens want multinational corporations to pay fair taxes on EU soil where the money is generated. The current fiscal regimes increase disparities and allow profit-shifting and bank secrecy. The result is that most of the fiscal burden falls on less mobile taxpayers, such as retirees, employees, and consumers, whilst corporations and billionaires get away with their misconduct.

Transparency International encourages citizens all over the globe to keep asking for accountability and improvements in their financial and fiscal systems without giving up. In 1997, the German government made bribes paid to foreign officials by German companies tax-deductible, and until February 1999 German companies were allowed to bribe in order to do business across the border, which had been common practice, particularly in Asia and Latin America, since at least the early 1970s. But things have changed. Ohme is aware of the many daily scandals related to corruption and tax evasion, and for this reason he considers the work of Transparency International necessary. However, he invited his audience not to describe it as a radical organisation, but as an independent one that operates on the basis of research and objective investigations.

In the last months of 2019 in Germany, the so-called Cum-Ex scandal caught the attention of international news outlets as investigators discovered a trading scheme exploiting a tax loophole on dividend payments within the German tax code. Authorities allege bankers helped investors reap billions of euros in illegitimate tax refunds, as Cum-Ex deals involved a trader borrowing a block of shares to bet against them, and then selling them on to another investor. In the end, parties on both sides of the trade could claim a refund of withholding taxes paid on the dividend, even though prosecutors contend that only a single rebate was actually due. The loophole was closed in 2012, but investigators think that in the meantime companies like Freshfields advised many banks and other participants in the financial markets to illegally profit from it.
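The core of the scheme described above, two refund claims against a single tax payment, can be shown with a toy calculation. This is only a minimal sketch: the dividend amount, the 25 per cent withholding rate, and the function name `cum_ex_outcome` are hypothetical values chosen for illustration, not figures from the investigation.

```python
def cum_ex_outcome(dividend, withholding_rate, refund_claims):
    """Toy model: tax is withheld once, but refunded `refund_claims` times."""
    tax_paid = dividend * withholding_rate      # paid to the treasury once
    refunds_claimed = tax_paid * refund_claims  # each party claims the full amount
    return {
        "tax_paid": tax_paid,
        "refunds_claimed": refunds_claimed,
        "treasury_loss": refunds_claimed - tax_paid,
    }


# Hypothetical figures: a 1,000,000 euro dividend, 25% withheld, two claimants.
result = cum_ex_outcome(dividend=1_000_000, withholding_rate=0.25, refund_claims=2)
print(result)
```

With a single claimant the treasury loss in this model is zero; every additional claim on the same payment is a pure loss to the public budget.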

As both Heywood and Ohme stressed, we need measures that guarantee open access to relevant information, such as the beneficial owners of assets held by entities and arrangements like shell companies and trusts – that is to say, information about the individuals who ultimately control or profit from a company or estate. Experts indicate that registers of beneficial owners help authorities prosecute criminals, recover stolen assets, and deter new offences; they make it harder to hide connections to illicit flows of capital out of a national budget.

Referring to the latest package of measures on money laundering and financial transparency, awaiting approval by the German parliament, Ohme expressed cautious appreciation for the improvements, as real estate agents, gold merchants, and auction houses will be subject to tighter regulations in the future. Lawmakers complained that the US embassy and Apple tried to quash part of these new rules and that during the parliamentary debate they sought to intervene with the Chancellery to prevent a section of the law from being adopted. The attempt concerned a regulation that forces digital platforms to open their interfaces to payment services and apps, such as the payment platform Apple Pay, but it did not succeed. Apple’s behaviour is a sign of the constant interference by the interests at stake whenever these topics are discussed.

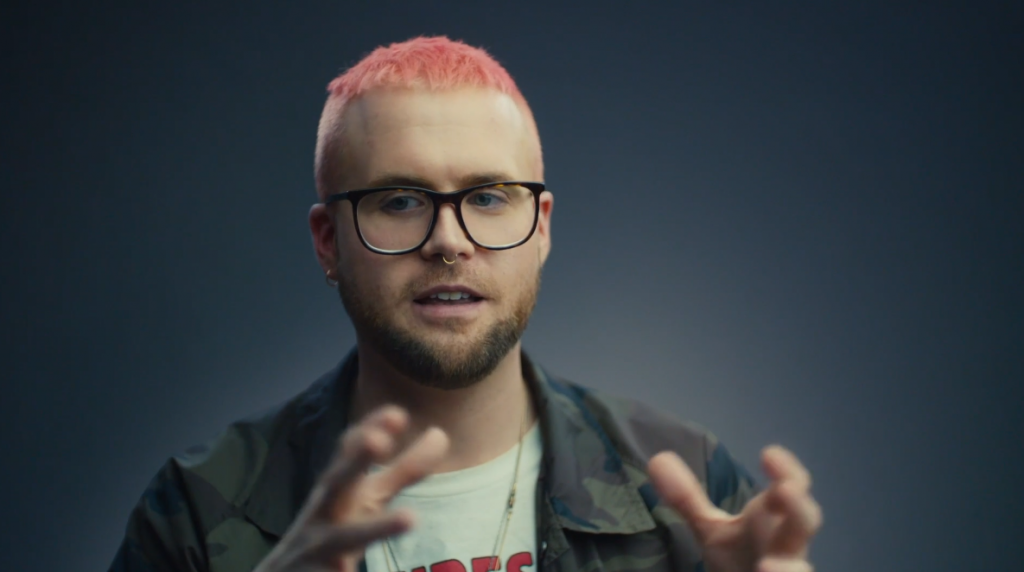

At the end of the first talk, DNL hosted a screening of the documentary ‘Pink Hair Whistleblower’ by Marc Silver. It is an interview with Christopher Wylie, a former employee of the British consulting firm Cambridge Analytica, who revealed how in 2014 the firm built a system that could profile individual US voters in order to target them with personalised political advertisements and influence the results of the elections. At the time, the company was owned by the hedge fund billionaire Robert Mercer and headed by Steve Bannon, Donald Trump’s key advisor and the architect of a far-right network of political influence.

The DNL discussed this subject widely within the conference ‘Hate News: Manipulators, Trolls & Influencers’ (May 2018), trying to define the ways of pervasive, hyper-individualized, corporate-based, and illegal harvesting of personal data – at times developed in partnership with governments – through smartphones, computers, virtual assistants, social media, and online platforms, which could inform almost every aspect of social and political interactions.

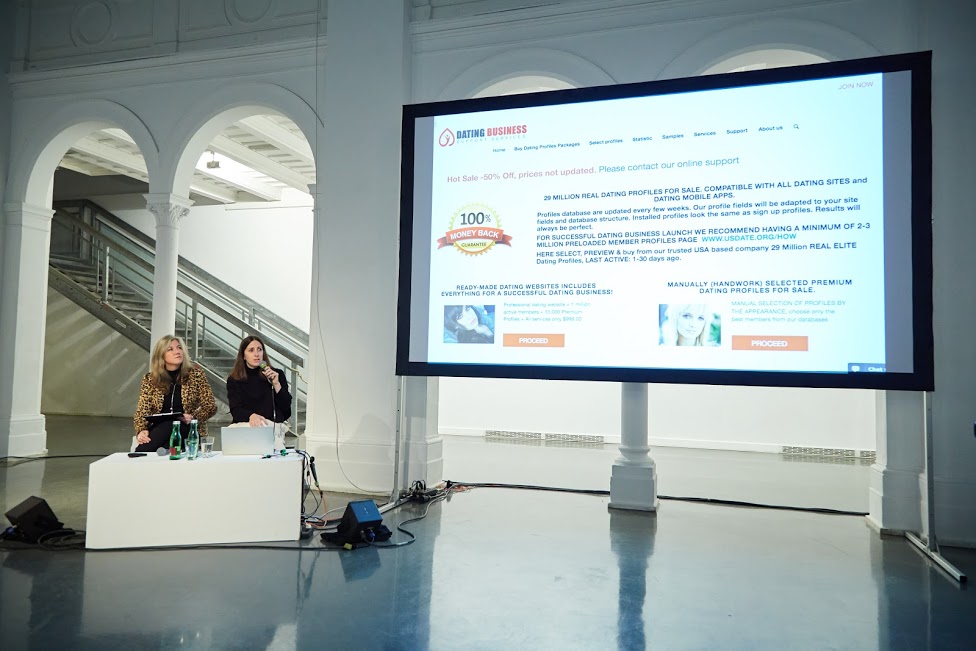

With the overall theme ‘AI Traps: Automating Discrimination’ (June 2019), DNL sought to define how artificial intelligence and algorithms reinforce prejudices and biases in society. These same issues were raised in the Activation conference, in the talk ‘An Autopsy of Online Love, Labour, Surveillance and Electricity/Energy.’ Joana Moll, artist and researcher, in conversation with DNL founder Tatiana Bazzichelli, presented her latest projects ‘The Dating Brokers’ and ‘The Hidden Life of an Amazon User,’ on the hidden side of IT interfaces and data harvesting.

The artist’s work moves from the challenges of the so-called networked society to a critique of social and economic practices of exploitation, focusing on what stands behind the interface of technology and IT services and giving a visual representation of what is hidden. The fact that users do not see what happens behind the online services they use has weakened the ability of individuals and collectives to define and protect their privacy and self-determination, leaving them stuck in traps built to extract the most from their conscious or unconscious contributions. Moll explains that, although most people’s daily transactions are carried out through electronic devices, we know very little of the activities that take place behind and beyond the interface we see and interact with. We do not know how the machine is built, and we are mostly not in control of its activities.

Her project ‘The Dating Brokers’ focuses on the current practices in the global online dating ecosystem, which are crucial to its business model but mostly opaque to its users. In 2017, Moll purchased 1 million online dating profiles from the website USDate, a US company that buys and sells profiles from all over the world. For €136, she obtained almost 5 million pictures, usernames, email addresses, details about gender, age, and nationality, and personal information such as sexual orientation, private interests, profession, physical characteristics, and personality. By analysing a few profiles and looking for matches online, the artist was able to map a vast network of companies and dating platforms capitalising on private information without the consent of their users. The project is a warning about the dangers of placing blind faith in big companies, and it raises alarming ethical and legal questions which urgently need to be addressed, as dating profiles contain intimate information on users, and the exploitation and misuse of this data can have dramatic effects on their lives.
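To give a sense of scale, the unit price implied by the figures above can be worked out in a few lines. This is a rough sketch using only the numbers reported here; the picture count is rounded up to 5 million, which is an assumption since the talk said “almost”.

```python
PRICE_EUR = 136          # total price paid for the data set
PROFILES = 1_000_000     # number of dating profiles purchased
PICTURES = 5_000_000     # "almost 5 million" pictures, rounded up (assumption)

price_per_profile = PRICE_EUR / PROFILES    # euros per profile
profiles_per_euro = PROFILES / PRICE_EUR    # profiles bought with one euro
pictures_per_profile = PICTURES / PROFILES  # average pictures per profile

print(f"{price_per_profile:.6f} EUR per profile")
print(f"about {profiles_per_euro:.0f} profiles per euro")
print(f"about {pictures_per_profile:.0f} pictures per profile")
```

In other words, each profile, with its pictures and intimate details, was sold for a small fraction of a cent.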

With the ongoing project ‘The Hidden Life of an Amazon User,’ Moll attempts to define the hidden side of interfaces. The artist documented what happens in the background during a simple order on the platform Amazon. While she purchased the book ‘The Life, Lessons & Rules for Success’ by Amazon founder Jeff Bezos, her computer was loaded with so many scripts and requests that she could trace almost 9,000 pages of code generated by the order, and more than 87 megabytes of data running in the background of the interface. A large part of the scripts are JavaScript files that can theoretically be employed to collect information, but it is not possible to know what each of these commands does.

With this project, Moll describes the hidden aspects of a business model built on the monitoring and profiling of customers, one that encourages them to share more details, spend more time online, and make more purchases. Amazon and many other companies aggressively exploit their users as a core part of their marketing activity. Whilst buying something, users provide clicks and data for free and supply free labour, whose energy costs do not appear on the companies’ bills. Customers navigate through the user interface as content and windows constantly load into the browser to enable interactions and record users’ activities. Every single click is tracked and monetized by Amazon, and the company can freely exploit external free resources, making a profit out of them.

The artist warns that these hidden activities of surveillance and profiling constantly contribute to the release of CO2. This is due to the fact that a massive amount of energy is required to load the scripts on the users’ machines. Moll followed just the basic steps necessary to get to the end of the online order and buy the book. More clicks would obviously generate much more background activity, a further environmental cost that customers of these platforms cannot choose to stop. This aspect should also be considered for its broader and long-term implications. Scientists predict that by 2025 the information and communications technology sector might use 20 per cent of all the world’s electricity, and consequently cause up to 5.5 per cent of global carbon emissions.

Moll concluded by saying we can hope that more and more individuals will decide to avoid certain online services and live in a more sustainable way. But trends show that the vast majority of people keep using these platforms and online services, which are harmful because of their hidden mechanisms: they affect people’s lives and have environmental and socio-economic consequences. Moll suggested that these topics should be approached at the community level to find political solutions and countermeasures.

The 17th conference of the Disruption Network Lab, ‘Citizens of Evidence’ (September 2019), was meant to explore the investigative impact of grassroots communities and citizens engaged in exposing injustice, corruption, and power asymmetries. Citizen investigations use publicly available data and sources to autonomously verify facts. More and more often, ordinary people and journalists work together to provide a counter-narrative to the deliberate disinformation spread by news outlets of political influence, corporations, and dark money think-tanks. In this Activation conference, a talk moderated by Nada Bakr, the DNL project and community manager, brought together Hadi Al Khatib, founder and director of ‘The Syrian Archive’, and artist and filmmaker Jasmina Metwaly to focus on the role of open archives in the collaborative production of social justice.

The panel ‘Archives of Evidence: Archives as Collective Memory and Source of Evidence’ opened with Jasmina Metwaly, member of Mosireen, a media activist collective that came together to document and spread images of the Egyptian Revolution of 2011. During and after the revolution, the group produced and published over 250 videos online, focusing on street politics, state violence, and labour rights, and reaching millions of viewers on YouTube and other platforms. Mosireen, whose name in Arabic is a pun on the words “Egypt” and “Determination” and can be translated as “we are determined,” has worked since its birth on collective strategies to allow participation and to channel the energy and impulses of the 2011 protesters into the constructive discourse needed to keep on fighting. The Mosireen activists organised street screenings, educational workshops, production facilities, and campaigns to raise awareness of the importance of archives in the collaborative production of social justice.

In January 2011, the wind of the Tunisian Revolution reached Egyptians, who gathered in the streets to overthrow the dictatorial system. In Cairo’s central Tahrir Square, people occupied public space for more than three weeks in a determined and peaceful protest for social and political change, demanding democracy and stronger human rights.

Over five years, starting in 2013, the collective put together the platform ‘858: An Archive of Resistance’ – an archive containing 858 hours of video material from 2011, in which footage is collected, annotated, and cross-indexed for consultation. It was released on 16 January 2018, seven years after the Egyptian protests began. The material is time-stamped and published without a linear narrative, and it is hosted on Pandora, an open-source tool accessible to everybody.

The documentation gives a vivid representation of the events. There are historical moments recorded at the same time from different perspectives by dozens of different cameras; there are videos of people expressing their hopes and dreams whilst occupying the square or demonstrating; there is footage of human rights violations and video sequences of military attacks on demonstrators.

In the last six years, the narrative about the 2011 Egyptian revolution has been polluted by revisionism, mostly propaganda by the government and other parties seeking to appropriate it. In the meantime, Mosireen was working on the original videos from the revolution, conscious of the increasing urgency of such a task. Memory is subversive and can become a tool of resistance, as the archive preserves the voices of those who were on the streets animating those historical days.

Thousands of different points of view together compose a collection of visual evidence that can play a role in preserving the memory of events. The archive is studied in universities, and several videos have been used for research on the types of weapons used by the military and the police. But what is important is that people who took part in the revolution are thankful for its existence. The archive appears as one of the available strategies to preserve people’s own narratives and memories of the revolution, making them impermeable to manipulation. In those days and in the following months, Egypt’s public spaces were places of political ferment, cultural vitality, and action for citizens and activists. The masses were filled with creativity and rebellion. But that identity is at risk of disappearing. That kind of participation and of filming is not possible anymore; public spaces are besieged. The archive cannot be just about preserving and inspiring. The collective is now looking for more videos and is determined to carry on its work of providing a counter-narrative on Egyptian domestic and international affairs, despite tightened surveillance, censorship, and hundreds of websites blocked by the government.

There are many initiatives that aim to resist the forgetting of facts and the silencing of independent voices. In 2019, the Disruption Network Lab worked on this with Hadi Al Khatib, founder and director of ‘The Syrian Archive,’ who spoke on this panel within the Activation conference. Since 2011, Al Khatib has been working on collecting, verifying, and investigating citizen-generated data as evidence of human rights violations committed by all sides in the Syrian conflict. The Syrian Archive is an open-source platform that collects, curates, and verifies visual documentation of human rights violations in Syria, preserving data as a digital memory. The archive is a means to establish a verified database of facts, and a tool to collect evidence and objective information that can bring order to the ecosystem of misinformation and injustice surrounding the Syrian conflict. It also includes a database of metadata to contextualise videos, audio recordings, pictures, and documents.

Such a project can play a central role in establishing responsibilities, violations, and misconduct, and could contribute to eventual post-conflict judicial processes, since the archive’s structure and methodology are designed to meet international standards. The Syrian conflict is a bloody reality involving international actors and interests, and it is far from over. International reports in 2019 indicate at least 871 attacks on vital civilian facilities and the deaths of 3,364 civilians, one in four of whom were children.

The platform makes sure that journalists and lawyers are able to use the verified data for their investigations and criminal case building. The work on the videos is based on meticulous attention to detail, and on comparisons with official sources and publicly available materials such as photos, footage, and press releases disseminated online.

The Syrian activist and archivist explained that a lot of important documents can be found on external platforms like YouTube, which, under pressure to remove “extremist content,” use AI to censor and erase material, purging vital human rights evidence in the process. Social media platforms have recently been criticized for acting too slowly when killers live-stream mass shootings, or for allowing extremist propaganda on their services.

DNL has already focused on the consequences of automated removal, which in 2017 deleted 10 per cent of the archives documenting violence in Syria. Artificial intelligence detects and removes content, but an automated filter cannot tell the difference between ISIS propaganda and a video documenting government atrocities. YouTube, which is owned by Google, has already erased 200,000 videos of documentary and historical relevance. In countries at war, the evidence captured on smartphones can provide a path to justice, but AI systems often flag it as inappropriate violent content and consequently erase it.

Al Khatib launched a campaign urging platforms to fix and improve the content moderation systems they use to police extremist content and, when they define their measures against misinformation and crime, to take into account aspects like the preservation of the common memory of relevant events. Twitter, for example, has just announced a plan to remove accounts which have been inactive for six months or longer. As Al Khatib explains, this could result in a significant loss to the memory of the Syrian conflict and of other war zones, and cause the loss of evidence that could be used in justice and accountability processes. There are users who have died, are detained, or have lost access to the accounts on which they used to share relevant documents and testimonies.

In the last year, the Syrian Archive platform was replicated for Yemen and Sudan to support human rights advocates and citizen journalists in their efforts to document human rights violations, developing new tools to increase the quality of political activism, future prosecutions, human rights reporting and research. In addition to this, the Syrian Archive often organises workshops to present its research and analyses, such as the one in October within the Disruption Network Lab community programme.

The DNL often focuses on how new technologies can advance or restrict human rights, sometimes offering both possibilities at once. For example, free open technologies can significantly enhance freedom of expression by opening up communication options; they can assist vulnerable groups by enabling new ways of documenting and communicating human rights abuses. At the same time, hate speech can be more readily disseminated; surveillance technologies are employed without appropriate safeguards and impinge unreasonably on the privacy of individuals; and infrastructures and online platforms can be controlled to hunt down and discredit minorities and those who speak freely. The closing panel discussion of the conference was entitled ‘Algorithmic Bias: AI Traps and Possible Escapes’. Its moderator, Ruth Catlow, introduced the two speakers and asked them to debate effective ways to define this issue and to discuss possible solutions.

Ruth Catlow is co-founder and co-artistic director of Furtherfield, an art gallery in London’s Finsbury Park that is home to artworks, labs, and debates based on playful, collaborative art research experiences, always across distances and differences. Furtherfield diversifies the people involved in shaping emerging technologies through an arts-led approach, always looking for ways to disrupt the network power of technology and culture, to engage with the urgent debates of our time, and to make these debates accessible, open, and participatory. One of its latest projects focused on algorithmic food justice, environmental degradation, and species decline. Exploring how new algorithmic technologies could be used to create a fairer and more sustainable food system, Furtherfield worked on solutions in which culture comes before structures, and human organisation and human needs – or the needs of other living beings and living systems – are at the heart of the design of technological systems.

As Catlow recalled, in the conference ‘AI Traps: Automating Discrimination’ (June 2019), the Disruption Network Lab focused on possible countermeasures to the biases, including racial bias, that are embedded in and reinforced through AI decision-making tools. It was an inspiring and stimulating event on inclusion, education, and diversity in tech, highlighting how algorithms are not neutral and unbiased. On the contrary, they often reflect, reinforce, and automate the current and historical biases and inequalities of society, such as social, racial, and gender prejudices. The panel within the Activation conference framed these issues in the context of the work of the two speakers, Caroline Sinders and Sarah Grant.

Sinders is a machine learning design researcher and artist. In her work, she focuses on the intersections of natural language processing, artificial intelligence, abuse, online harassment, and politics in digital and conversational spaces. She presented her latest study on intersectional feminist AI, focusing on labour and automated computer operations.

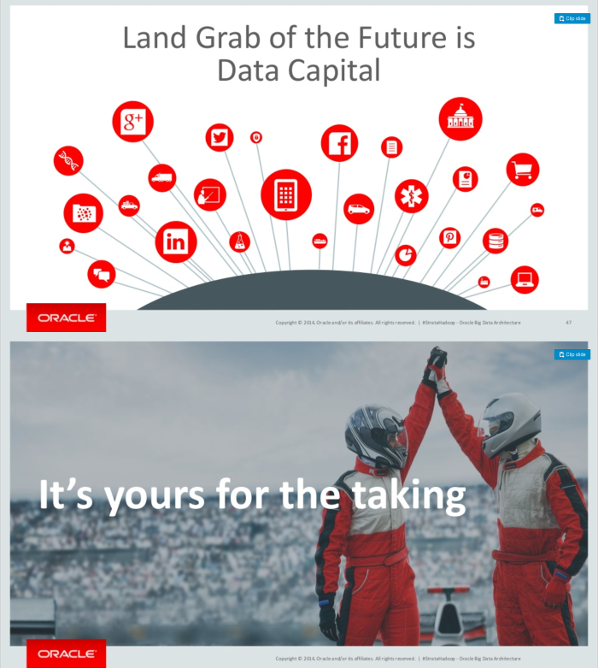

Quoting Hyman (2017), Sinders argued that the world is going through what some are calling a Second Machine Age, in which the re-organisation of people matters as much as, if not more than, the new machines. Employees receiving a regular wage or salary have begun to disappear, replaced by independent contractors and freelancers whose remuneration is calculated on the basis of time worked, output, or piecework. The labour and social rights won through hard, bloody fights over the last two centuries seem to have become irrelevant. More and more tasks are performed by AI, which plays a big role in the revenues of big corporations. But still, the abilities of machines are possible only thanks to the fundamental contribution of human work.

Sinders begins her analysis from the observation that human labour has become hidden inside automation, but is still integral to it. The training of machines is a process in which human hands touch almost every part of the pipeline, making decisions. However, the people who train data models are underpaid and unseen inside this process. Taking her cue from Thomas Thwaites’ toaster project, a critical design project in which the artist built a commercial toaster from scratch, melting iron, building circuits, and creating a new plastic shell, Sinders analyses the artificial intelligence economy through the lens of intersectional feminism, to define how and to what extent it is possible to create an AI that respects, in all its steps, the principles of non-exploitation, non-bias, and non-discrimination.

Her research considers the ‘Mechanical Turk’ model, in which a machine masquerades as a fully automated robot but is operated by a human. Mechanical Turk is also the name of a platform run by Amazon, where people execute computer-like tasks for a few cents, and which has become synonymous with low-paid digital piecework. A recent study that analysed nearly 4 million tasks performed on Mechanical Turk by almost 3,000 workers found that those workers earned a median wage of about $2 an hour, whilst only 4% of them earned more than $7.25 an hour. Since 2005 this platform has flourished. Its workers are used to train AI systems online. Even though the work mostly resembles systematised factory jobs, this labour falls under the gig economy, so that the people doing it are considered gig workers, with no paid breaks, holidays, or guaranteed minimum wage.

Sinders concluded that an ethical, equitable, and feminist environment is not achievable within a process based on competition among slave labourers, one that discourages unions, pays a few cents per repetitive task, and creates nameless and hidden labour. Such a process must be thoughtful and critical in order to guarantee the basis for equity; it must be open to feedback and interpretation, created for communities and as a reflection of those communities. To create a feminist AI, it is necessary to define labour, data collection, and data training systems, not just by asking how the algorithm was made, but by investigating and questioning them from an ethical standpoint at every step of the pipeline.

In her talk, Grant, founder of Radical Networks, a community event and art festival for critical investigations and creative experiments around networking technology, described the three main planes that online users interact with and on which injustices and disenfranchisement can occur.

The first one is the control plane, which refers to internet protocols. It is the plumbing, the infrastructure. A protocol is basically a set of rules which governs how two devices communicate with each other. It is not just a technical matter, because defining a protocol is a political action that involves exerting control over a group of people. It can also mean making decisions for the benefit of a specific group of people, so the question is whether our protocols are political.

The Internet Engineering Task Force (IETF) is an open standards organisation which develops and promotes voluntary Internet standards, in particular the standards that comprise the Internet protocol suite (TCP/IP). It has no formal membership roster, and all participants and managers are volunteers, though their activity within the organisation is often funded by their employers or sponsors. The IETF was initially supported by the US government, and since 1993 it has been operating as a standards-development function under the international membership-based non-profit organisation Internet Society. The IETF is controlled by the Internet Engineering Steering Group (IESG), a body that provides final technical review of the Internet standards and manages the day-to-day activity of the IETF, setting the standards and best practices for how to develop protocols. It receives appeals against the decisions of the working groups and decides whether to progress documents along the standards track. As Grant explained, many of its members are currently employed by major corporations such as Google, Nokia, Cisco, and Mozilla. Though they serve as individuals, this raises a conflict of interest and undermines independence and autonomy. The founder of Radical Networks is pessimistic about whether for-profit companies can be trusted on these aspects.

The second plane is the user plane, where we find the users’ experience and the interface. Here two aspects come into play: the UX (user experience design) and the UI (user interface design). UX is the presumed interaction model which defines the process a person will experience when using a product or a website, while the UI is the actual interface, the buttons and different fields we see online. UX and UI are supposed to serve the end-user, but this is often not the case. Interfaces are frequently optimised to get users to act online in ways which are not in their best interest; the internet is full of so-called dark patterns designed to mislead or trick users into doing things that they might not want to do.

These dark patterns are part of the weaponised design dominating the web, which wilfully allows users to be harmed and is implemented by designers who are not aware of or concerned about the politics of digital infrastructure, often considering their work to be apolitical and just technical. In this sense, they think they can keep design for designers only, shutting out all the other components that constitute society, and this is itself a political choice. Moreover, when we consider the relation between technology and aspects like privacy, self-determination, and freedom of expression, we need to think of the international human rights framework, which was built to ensure that – as society changes – the fundamental dignity of individuals remains essential. Over time, the framework has proved adaptable to changing external events, and we are now asked to apply the existing standards to address the technological challenges that confront us. However, it is up to individual developers to decide how to implement protocols and software, for example by considering human rights standards by design, and such choices have a political connotation.

The third level is the access plane, which controls how users actually get online. Here, Grant used Project Loon as an example to describe the importance of owning the infrastructure. Project Loon is an activity of Loon LLC, an Alphabet subsidiary working on providing Internet access to rural and remote areas and bringing connectivity and coverage after natural disasters with internet-beaming balloons. As the panellist explained, it is an altruistic gesture for vulnerable populations, but companies like Google and Facebook respond to the logic of profit, and we know that controlling the connectivity of large populations provides power and opportunities to make a profit. Corporations with data and profiling at the core of their business models have come to dominate the markets; many view with suspicion the desire of big companies to provide Internet access to the four billion people who are currently not online.

As Catlow warned, we are running the risk that the Internet becomes equal to Facebook and Google. Instead, we need communities able to develop new skills and build infrastructures that are autonomous, like wireless mesh networks, which are designed so that small devices called ‘nodes’ – commonly placed on top of buildings or in windows – can send and receive data and a Wi-Fi signal to one another without an Internet connection. The largest and oldest wireless mesh network is the Athens Wireless Metropolitan Network, or A.W.M.N., in Greece, but we also have other successful examples in Barcelona (Guifi.net) and Berlin (Freifunk Berlin). The goal is not just counterbalancing the superpowers of telecommunications and corporations, but building consciousness, participation, and tools of resistance too.
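The idea that mesh nodes pass data to one another without a central Internet connection can be illustrated with a minimal sketch. It is not how A.W.M.N., Guifi.net or Freifunk actually route traffic (real meshes run dedicated routing protocols); it only assumes a hypothetical list of radio links between invented node names and uses a plain breadth-first search to find a hop-by-hop route.

```python
from collections import deque


def find_route(links, source, target):
    """Return a shortest hop-by-hop route between two mesh nodes, or None.

    `links` is a list of (node, node) pairs; every link is assumed to be
    usable in both directions. All node names are hypothetical.
    """
    # Build a neighbour table from the list of links.
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)

    # Breadth-first search, remembering which node each node was reached from.
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back from the target to the source to rebuild the route.
            route = []
            while node is not None:
                route.append(node)
                node = previous[node]
            return route[::-1]
        for neighbour in sorted(adjacency.get(node, ())):
            if neighbour not in previous:
                previous[neighbour] = node
                queue.append(neighbour)
    return None  # no chain of links connects the two nodes


# Hypothetical neighbourhood: four nodes, each only in range of the next one.
links = [
    ("rooftop_a", "window_b"),
    ("window_b", "rooftop_c"),
    ("rooftop_c", "rooftop_d"),
]
route = find_route(links, "rooftop_a", "rooftop_d")
```

In this toy neighbourhood the first and last nodes are not in direct range of each other, so data only gets through because the two nodes in between relay it; no link in the list leads to an Internet provider.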

The Activation conference gathered the community around the Disruption Network Lab at Künstlerhaus Bethanien in Berlin to share collective approaches and tools for activating social, political, and cultural change. It was a moment to meet collectives and individuals working on alternative ways of intervening in social dynamics and to discover ways to connect networks and activities to disrupt systems of control and injustice. Curated by Lieke Ploeger and Nada Bakr, this conference developed a shared vision grounded firmly in the belief that by embracing participation and supporting the independent work of open platforms as a tool to foster participation and social, economic, cultural, and environmental transparency, citizens around the world have enormous potential to implement justice and political change, to ensure inclusive, more sustainable and equitable societies, and more opportunities for all. To achieve this, it is necessary to strengthen the many existing initiatives within international networks, enlarging the cooperation of collectives and organisations engaged with these challenges, to share experiences and good practices.

Information about the 18th Disruption Network Lab Conference, its speakers, and topics is available online:

https://www.disruptionlab.org/activation

To follow the Disruption Network Lab, sign up for its newsletter and get information about conferences, ongoing research, and latest projects. The next event is planned for March 2020.

The Disruption Network Lab is also on Twitter and Facebook.

All images courtesy of Disruption Network Lab

This year’s festival “Mine, yours, ours” (Moje, tvoje, naše) puts the emphasis on the people behind the work performed by machines, literally as well as metaphorically, without whom the machines cannot perform their work.

Automatization, computerization, robotization, mechanization. Recent studies point out that by 2030 as many as 800 million workers throughout the world will lose their jobs to robots. Web portals have been flooded with predictions that in just six years’ time robots with artificial intelligence will have replaced more than half of the human workforce, leaving 75 million people unemployed, and that the share of robots performing work of which humans are capable will double from the current 29%.

Questioning the status of work conditioned by the relation between man and machines, as observed from the perspective of contemporary artists, was chosen as the theme of the festival “Mine, yours, ours”, organised by Drugo More and held from the 14th to the 16th of February in the Filodrammatica building in Rijeka. For fourteen years in a row, the international festival “Mine, yours, ours” has used art to question topics related to the exchange of goods and knowledge, an exchange that today is conditioned by capital and consequently bereft of all the aspects of a gift economy. Exchange via gifts has been replaced by mechanical work whereby the worker is distanced from the very purpose of the work and thereby doomed to a feeling of purposelessness, while the final product and service remain dehumanized. In a world that is mongering fear about robots taking over human jobs, the curator of the exhibition and moderator of the symposium, Silvio Lorusso, decided to give a new perspective on the topic by putting the emphasis on the people behind the work of the machines, literally and metaphorically, without whom the machines cannot – work.

Like robots that perform work mechanically and without intrinsic motivation, people play the roles of robots through various forms of work. We attribute the sequences of actions that we observe on the internet to the work of machines, unaware that human work, hidden on purpose, is behind all the exposed data. According to research by artist Sebastian Schmieg, a human writes the descriptions of the photos that we find on the internet, and we are deceiving ourselves in thinking that the descriptions are the product of an automatized system. A group of crowd workers creates the database for the recognition of pictures, while the neural network of artificial intelligence is shaped with the help of manual work by a collective of people who choose what the machines will see and what is to remain unrecognized. The role of human work is crucial to the processes that enable self-driving cars. Researcher and designer Florian Alexander-Schmidt explained how the detailed localization of an object is possible exclusively due to a human workforce, not robots. Digital platforms aim to locate vehicles at a precision rate of 99%, and this cannot be achieved by an algorithmic system. Alexander-Schmidt points out that the cheapest workers, such as those from Venezuela, are employed to service the digital platforms, and that their work is hidden behind the magical acronym “AI”.

Artificial intelligence operates thanks to the strength and precision of human intelligence, and workers are the slaves of a system that functions like a computer game, demanding that employees score more and thereby perpetuate a game in which, no matter how high they score, they lose. They lose because a cheap labor force, qualified via automatized training, secretly performs the work that consumers consider a product of automatization, and they remain at insecure workplaces whose existence persists due to risky capital and results in constant change of workplaces – virtual migrations. The market of the internet diminishes the global geography of honorary work, while humans play machines performing jobs exclusively for a salary, turning into an invisible, undefined (robotized) mass employed at unstable workplaces and conditioned by risky capital.

The work performed by humans is considered a product of the work of machines, while humans are in turn often replaced by machines. Entering a relationship and developing a sense of connection, characteristic of the human species, is becoming one of the fields in which robots are developing. Chatrooms and platforms for finding a partner more and more often include programmed robot profiles that engage in communication and emotional bonding with those who sign up, as reported by the artist Elisa Giardina Papa. Robots replace humans, but this change of identity isn’t recognized by the other human – instead, they see a potential partner. In that sense we can speak of a justified presentiment not only of a loss of workplaces and of the current modus operandi of the labor market, but of changes in the way emotional relationships are built as well.

Developments in artificial intelligence (AI), virtual reality (VR), robotics, quantum computers, the internet of things (IoT), 3D printing, nanotechnology, biotechnology and the car industry improve some aspects of human life, but confront humanity with social, economic and geopolitical challenges. The sustainability of the internet is entirely dependent on humans, since the spare time that humans are ready to use for posting content conditions the survival of web portals, video and music channels and social networks. Giardina Papa explained how the spare time of humans has become time of unpaid work for the internet. The activities that spare time offers – relaxing, self-realization, healthy spontaneous sleep – have been replaced with the production of content for others and posting it on the internet. Creativity, productivity and self-realization are falling into oblivion. Creative work has been replaced by plain performance, and we identify more and more often with the byword which Giardina Papa brought up as an example: we are becoming “doers, not dreamers”.

Such an approach to life and work affects everybody, and artists consciously regard it as a problem in their attempts at a good-quality creative life. The average artist spends the day trapped in jobs such as stocking shelves in stores, taking orders or working for telecommunications operators, and only at night devotes time to acting, dancing or painting. Constant financial instability, insecure and temporary jobs, low income, unregistered work and the absence of a pension are the conditions of life that the contemporary artist faces. A few European countries are an exception to this. Denmark awards 275 artists an annual scholarship that amounts to between 15,000 and 149,000 Danish kroner. France has made sure that every region secures a certain amount of financial support for artists; in the region of Île-de-France, for example, artists can receive up to 7,628.47 euros for the organization of their workshops. Unfortunately, in most countries artists without rich parents or benefactors can only survive by performing daily work. While spare time is subordinate to work, work is becoming more and more precarious, paid per hour and without contracts, while working all day is becoming the lifestyle of the millennium. A way of life where spare time is spent working, subordinated to a mechanical execution of tasks that don’t foster the self-development of the worker, makes man function in a way characteristic of machines.

The individual aspect exposes its intimacy in front of a collective audience and thereby diminishes its own independence. Simultaneously, via the intrusion of the private into the public, the collective character of the public becomes more individualized, more personal, and strives to be unique. Faced with these changes, artists are trying out new ways of expressing themselves as a means of enjoying their work. The joy in their work and their artworks stems from the recognition that their activities are actually creative and not plain performance, that is, mass-producing content for others and suitable to others, and such an approach seldom comes to anyone’s (robotized/artistic) mind anymore. Artists are the ones who, in times of computerization of work, tend to enjoy their work and to work in a way that develops the self.

We are witnessing processes in which work is developing via control of people (crowdsourcing); it is transforming, making people similar to machines and machines similar to people, and turning people’s work into the work of machines and vice versa. Man is faced with new challenges at the workplace, and with the focus on securing existential necessities as well as necessities conditioned by the contemporary approach to existence, there is no spare time to devote to questioning the position into which he has brought himself. Artists, equally affected by the changes, succumb to them, but also analyze them, deautomatize them and actively do research on them. Through their works, the international guests of the festival “Mine, yours, ours” have pulled back the curtain to make supposedly automatized work transparent, so that people can see that a well-hidden group of humans is behind this work. While explaining the results of their research at a two-day symposium preceding the artistic exhibition, they gave the public new perspectives on the theme, but also further corroborated the existing view. Where does the robot begin and where does man end, and who or what is to whom or to what a robot, and who or what to whom or to what a human, are the questions that, concerning the labor market, pave the way for – a new set of sub-questions.

Jelena Uher

Economic theory states that technological change comes in waves: one innovation rapidly triggers another, launching the disruptions from which new industries, workplaces and jobs are born. Steam power set in motion the industrial revolution, and likewise since the 1990s a torrent of digital and software developments has transformed industries and our working lives. But the revolutionary very quickly becomes humdrum, and once-radical and efficient innovations like the telephone, email, smartphones and Skype become part of everyday, even mundane experience. Despite all the time-saving devices we have successfully integrated into our lives, there is a collective anxiety about the current wave of technological change and what more the future holds. Mainstream dystopian visions of our relationship with technology abound, but are we in fact engaged in a group act of cognitive dissonance: using our smartphones to read and worry about robots taking over our jobs, whilst wishing for a shorter work week and more time for creative pursuits?

The British Academy recently brought together a panel of experts in robotics, economics, retail and sociology to talk about how technology is reshaping our working lives. This review summarises some of their thoughts on the situation now, and what developments lie ahead. Watch the full debate here.

Helen Dickinson OBE reported on the British Retail Consortium’s project, Retail 2020, a practical example of how technology is changing consumer behaviour and affecting firms in her industry. On the one hand, the UK’s retail sector has embraced technology and created a success story. The UK has the highest ecommerce spend per head in the developed world, with around 15% of transactions taking place online, and with 3.0m employees retail is also the largest private sector employer in the UK. On the other hand, internet price comparison has ushered in fierce price competition. Retailers are using technology to improve manufacturing and logistic efficiencies to control costs and offset shrinking profit margins. Physical stores are closing as sales migrate online. The BRC predicts a net 900,000 jobs will be lost by 2025. Nor will the expected impact be even: deprived regions are more reliant on retail employers and so will be more affected by job losses. Likewise, the most vulnerable, with less education or fewer skills and looking for work in their local area, will be the hardest hit.

Prof Judy Wajcman resisted the urge to overly rejoice or despair at technological developments. For her, this revolution is not so different to the waves which have come before. It is impossible to predict what new needs, wants, skills and jobs will be created by technological advances. Undoubtedly some jobs will be eliminated, others changed, and some created. However, we can certainly think beyond the immediate like-for-like: a washing machine saves labour, but it has also changed our cultural sense of what it means to be clean. Critically, we should stop thinking of technology as any kind of neutral, inevitable, unstoppable force. All technology is manmade and political, reflecting the values, biases and cultures of those creating it. As Wajcman said, ‘if we can put a man on the moon, why are women still doing so much washing?’ In other words, female subjugation to domestic labour could have been eliminated by technology, but persistent cultural norms have prevented this from happening.

Dr Sabine Hauert is a self-professed technological optimist. For her, technology has the potential to make us safer and empower us, for example by reducing road accidents, or allowing those who cannot currently drive to do so. Hauert sees a future not where robots completely replace humans, but where collaborative robots work alongside them to help with specific tasks. The crucial issue for dealing with this future lies in communication and education about new technologies, since the general public, mainly informed by news and cultural media, is ill-served by a steady drip of negative stories about our future with robots.

The short film Humans Need Not Apply is one such alarming production, chiming with Dr Daniel Susskind’s altogether more gloomy view of the longer term effects of technological advances on the workforce. To date, manufacturing jobs have been those most affected by automation, but traditionally white collar jobs also contain many repetitive tasks and activities (just ask the employee drumming their fingers on the photocopier). Computing advances mean that many more of these are now in scope for automation, as with the Japanese insurer replacing some underwriters with artificial intelligence. For Susskind, it is not certain that workers will continue to benefit from increased efficiencies as technology advances. A human uses a satnav provided s/he is still needed to drive, but the same satnav could just as easily interface with a self-driving car, eliminating the need for any kind of human-machine interaction. As the wholesale changes to UK heavy industry in the 1980s call to mind, any redeployment of labour will present huge challenges, and what work eventually remains may not be enough to keep large populations in well paid, stable employment.

Can humans benefit from robots in the workplace?

The panel agreed that technological change will continue apace with wide reaching ramifications for our workplaces and our wider societies, but that it is our human qualities that will give us an advantage over machines. Perhaps this is the most pressing notion: we urgently need to recalculate the value we place on tasks within society. Work where social skills, communication, empathy, and personal interaction are prioritised (like teaching or nursing) may develop a value above that which is rewarded today.

If we smell such change coming, it is no wonder we are anxious. The panellists differed on the ability of our society to absorb and adapt to coming technological change, and the distribution of any net benefit or loss. So, is the only option to accept the inevitable and brace for the tsunami to hit? Well, no. We need to realise that ‘technology’ is not one vast, distant wave on the horizon, but a series of smaller ripples already lapping higher around our ankles. Returning to Wajcman’s point, all technologies are created by people. If innovation has a cultural dimension, it can be influenced, so we must take heart and believe in our ability to effect change.

The further we can work to democratise and widen the pool of creative engineers, developers, artists, designers and critical thinkers contributing to the development of technologies, the broader the spectrum of resulting applications and consequent benefits to society as a whole. We can be conscious in our choices as consumers as we adopt new products and services into our lives, and challenge the new social norms emerging around work and life as technology allows us to blur the boundaries between them. And finally, we need to consider who profits, and who doesn’t, from new business models. We should lobby government to be deliberate in designing policy that looks to these future developments, and their likely unequal impacts across regions, industries and populations, to ensure that existing social inequalities are not entrenched or magnified. Hopefully the creative community can help steer this wave in the right direction, painting a vivid picture of our possible futures, to persuade the powerful to act in the interests of the greater good.

Helen Dickinson OBE, Chief Executive, British Retail Consortium

Dr Sabine Hauert, Lecturer in Robotics, University of Bristol

Dr Daniel Susskind, Fellow in Economics, University of Oxford and co-author of The future of the professions: How technology will transform the work of human experts (OUP, 2015)

Professor Judy Wajcman FBA, Anthony Giddens Professor of Sociology, LSE and author of Pressed for time: The acceleration of life in digital capitalism (Chicago, 2015)

Timandra Harkness, Journalist and author, Big Data: Does size matter? (Bloomsbury Sigma, 2016)

Katharine Dwyer is an artist who considers the modern corporate workplace in her practice.

Since 2005, Inke Arns has been the curator and artistic director of Hartware MedienKunstVerein, an institution focusing on the intersection of media and technology in forms of experimental and contemporary art. This year, she curated the exhibition alien matter for transmediale festival’s thirty-year anniversary. I had the pleasure of meeting Inke and taking a leisurely stroll with her around the exhibition.

The interview is written as part of a late-night email exchange with Inke a couple of weeks following our initial meeting.

CS: How did the idea come about? In your introductory text you mention The Terminator. Were you truly watching Arnold when alien matter occurred to you as an exploratory concept?

IA: Haha, good question! No, seriously, this particular scene from Terminator 2 (1991) was sitting in the back of my head for years, maybe even decades. It’s the scene where the T-1000, a shape-shifting android, appears as the main (evil) antagonist of the T-800, played by Arnold Schwarzenegger. The T-1000 is composed of a mimetic polyalloy. Its liquid metal body allows it to assume the form of other objects or people, typically terminated victims. It can use its ability to fit through narrow openings, morph its arms into bladed weapons, or change its surface colour and texture to convincingly imitate non-metallic materials. It is capable of accurately mimicking voices as well, including the ability to extrapolate from a relatively small voice sample in order to generate a wider array of words or inflections as required.

The T-1000 is effectively impervious to mechanical damage: if any body part is detached, the part turns into liquid form and simply flows back into the T-1000’s body from a range of up to 9 miles. Somehow, the strange material of the T-1000 was teaming up with Jean-François Lyotard’s notion of “Les Immatériaux” (1985). Lyotard tried to describe new kinds of matter that at first sight look like something that we have long known, but in fact are materials that have been taken apart and re-assembled and therefore come to us with radically new qualities. It is essentially alien matter which Lyotard was describing.

CS: You also comment on intelligent liquid and then make reference to four subcategories for the ‘rise of new object cultures’: AI, Plastic, Infrastructure, and the Internet of Things. Is this what makes up ‘alien matter’ to you? Inorganic materials? At the same time, HFT The Gardener and Hard Body Trade explicitly and dominantly utilise nature.

IA: Well, the shape-shifting intelligent liquid acts more like a metaphor. It is a metaphor for the fact that the clear division between active subjects and passive objects is becoming more and more blurred. Today, we are increasingly faced with active objects, with things that are acting for us. The German philosopher Günther Anders, yet another inspiration for alien matter, described in his seminal book The Obsolescence of Man (Die Antiquiertheit des Menschen) how machines – or computers – are “coming down”, how over time they have come to look less and less like machines, and how they are becoming part of the ‘background’. Or, if you wish, how they have become environment. That’s what I tried to capture in these four subcategories: AI, Internet of Things, Infrastructure and Plastic. These are subcategories that reflect our contemporary situation, and at the same time are future obsolete. All of this is becoming part of the big machine that is becoming visible on the horizon. The description that Anders uses is eerily up to date.

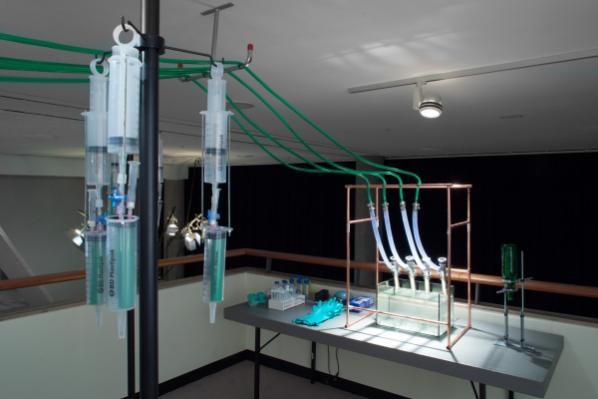

Is this alien matter inorganic? Well, yes and no. It is primarily something inorganic as plastic could be described as one of the earliest alien matters – its qualities, like, e.g., its lifespan, are radically different from human qualities. However, it is something that increasingly merges with organic matter – Alien in Green showed this in their workshop that dealt with the xeno-hormones released by plastic and how they can be found in our own bodies. They did this by analyzing the participants’ urine samples in which they found stuff that was profoundly alien.

In the exhibition, everything is highly artificial, even if it looks like nature, like in Ignas Krunglevicius’ video Hard Body Trade or Suzanne Treister’s series of drawings/prints HFT The Gardener. The ‘natural’ is becoming increasingly polluted by potentially intelligent xeno-matter. We are advancing into murky waters.

CS: There is no use of walls in the exhibition, other than Video Palace, standing as a monumental structure made out of VHS tapes. Why did you decide to exclude setting up rooms or walls for alien matter?

IA: I knew right from the beginning that I wanted to keep the space as open as possible. Anything you build into this specific space will look kind of awkward. This is also how I make exhibitions in general: keeping the exhibition space as open as possible, building as few separate spaces as possible in order to allow for dialogues to happen between the individual works. For alien matter we worked with raumlaborberlin, an architectural office that is known for its unusual and experimental spatial solutions and that has been working with transmediale for quite some time now. This was the first time I worked with them and I am super happy with the result. We met several times during the development process, and raumlabor proposed these amazing tripods you can see in the show. They serve as support for screens and the lighting system. (Almost) nothing is attached to the walls or the ceiling. raumlabor were very inspired by the aliens in H.G. Wells’ War of the Worlds – where the extraterrestrials are depicted with three legs and a gigantic head. Even if the show is not about aliens, I really liked the idea and the appearance of these tripods. They look at the same time elegant and strange, and through their sheer size they are also a bit awe-inspiring. Strange, elegant aliens, so to speak, to whom we have to look up in order to see. At the same time they are ‘caring’ for the exhibition, almost as if they were making sure that everything is running smoothly.

CS: What can you tell me about the narrative behind Johannes Paul Raether’s Protekto.x.x. 5.5.5.1.pcp.? You mentioned that it was originally a performance in the Apple Store, nearly branding the artist a terrorist.

IA: Correct. The figure central to the installation is one of the many fictional identities of artist Johannes Paul Raether, Protektorama. It investigates people’s obsession with their smartphones, explores portable computer systems as body prosthetics, and addresses the materiality, manufacturing, and mines of information technologies. Protektorama became known to a wider audience in July 2016 when a performance in Berlin, in which gallium—a harmless metal—was liquefied in an Apple store, led to a police operation at Kurfürstendamm. In contrast to the shrill tabloid coverage, the performative work of the witch is based on complex research and visualizations, presented here for the first time in the form of a sculptural ensemble including original audio tracks from the performance. The figure of Protektorama stems from Raether’s cyclical performance system Systema identitekturae (Identitecture), which he has been developing since 2009.

CS: Throughout the exhibition there is an awareness that technological singularity can and possibly will overcome the human body and condition. In the context of the exhibition, do you think that we may be accelerating towards technological and machinic singularity? As humans, are we already mourning the future?

IA: The technological singularity is a trans-humanist figure of thought that is currently being propagated by the mathematician Vernor Vinge and the author, inventor and Google employee Ray Kurzweil. This is understood as a point in time, and here I resort to Wikipedia, “at which machines rapidly improve themselves by way of artificial intelligence (AI) and thus accelerate technical progress in such a way that the future of humanity beyond this event is no longer predictable.” The next question you are probably going to ask is whether I believe in the singularity.

CS: Do you?

IA: Whether I believe in it? (laughs) The singularity is in fact a kind of almost theological figure. Technology and theology are very close to one another in a sense. The famous British science fiction author Arthur C. Clarke once said that any sufficiently developed technology can’t be differentiated from magic. I consider the singularity to be an interesting speculative figure of thought. Assuming the development of technology were to continue on its course as rapidly as it has to date, and Moore’s Law (stating that computing performance of computer chips doubles every 12-24 months) retained its validity, what would then be possible in 30 years? Could it really come to this tipping point of the singularity in which pure quantity is transformed into quality? I don’t know. What is interesting right now is that instead of the singularity, we are faced with something that the technology anthropologist Justin Pickard calls the ‘crapularity’: “3D printing + spam + micropayments = tribbles that you get billed for, as it replicates wildly out of control. 90% of everything is rubbish, and it’s all in your spare room – or someone else’s spare room, which you’re forced to rent through AirBnB.” I also suggest checking out the ‘Internet of Shit’ Twitter feed.

CS: You come from a literary background. Noticing the selection and curation of alien matter, it becomes clear that you love working with narratives. Do you feel as though your approach of combining narrative and speculative imaginations is fruitful and rewarding?

IA: I do (if I didn’t I wouldn’t do it). I think narrative – or: storytelling – and speculative imaginations are powerful tools of art. They allow us to see the world from a different perspective. One that is not necessarily ours, or that is maybe improbable or unthinkable today. The Russian Formalists called this (literary) procedure ‘estrangement’ (this was ten years before Bertolt Brecht with his ‘estrangement effect’). Storytelling and/or speculative imaginations help us grasp things that might be difficult to access from our or from today’s perspective. It’s like an interface into the unknown. Maybe you can compare it to learning a foreign language – it greatly helps you to understand your own native language.

CS: On a final note, I’d like to revisit a conversation we had during transmediale’s opening weekend. We spoke about a potential dichotomy or contention between the discourse followed by transmediale and that of the contemporary art world, using the review by The Guardian about the Berlin Biennale as an example. Although the review was beautifully written, you seemed to disagree with some of its points – particularly the notion put forward by the writer that the works shown there, similar in nature to the works in alien matter, are not ‘art’. Could you elaborate on your thoughts?

IA: You are mixing up several things – let me try to disentangle them. I was referring to the article “Welcome to the LOLhouse” published in The Guardian. The article was especially critical of the supposed cynicism and sarcasm it detected in the Berlin Biennale curators’ and most of the artists’ approaches. Well, what was true for the Berlin Biennale was the fact that it showed many younger artists from the field of what some people call ‘post-Internet’ art. This generation of artists – the ‘digital natives’ – mostly grew up with digital media. And one of the realities of the all-pervasive digital media is the predominance of surfaces. The generation of artists presented at the Berlin Biennale dealt a lot with these surfaces. In that sense it was a very timely and at the same time a cold reflection of the realities we are constantly faced with. I felt as if the artists held up a mirror in which today’s pervasiveness of shiny surfaces was reflected. It could be interpreted as sarcasm or cynicism – I would rather call it a realistic reflection of contemporary realities. And it was not necessarily nice what we could see in this mirror. But I liked it exactly because of this unresolved ambivalence.

About transmediale and the contemporary art world: These are in fact two worlds that merge or mix very rarely. I have often heard from people deeply involved in the field of contemporary art (even some friends of mine) that they are not interested in transmediale and/or that they would never attend the festival or go and see the exhibition. And vice versa. This is mainly due to the fact that the art people think that transmediale is too nerdy, it’s for the tech geeks (there is some truth in this), and the transmediale people are not interested in the contemporary art world as they deem it superficial (there is some truth in this as well). For my part, I am not interested in preaching to the converted. That’s why I included a lot of artists in the show that have never exhibited at transmediale before (like Joep van Liefland, Suzanne Treister, Johannes Paul Raether, Mark Leckey). However, the borders between the fields have become increasingly blurred. It is also visible that what is coming more from a transmediale (or ‘media art’) context clearly displays a greater interest in the (politics of) infrastructures that are covered by the ever shiny surfaces (that bring along their own but different politics).

I could continue but I’d rather stop, as it is Monday morning, 3:01 am.

You can also read a review of alien matter, available here.

alien matter is on display until the 5th of March, in conjunction with the closing weekend of transmediale. Don’t snooze on the last chance to see it!

All in-text images are courtesy of Luca Girardini, 2017 (CC NC-SA 4.0)

Main image is a still from the movie Terminator 2: Judgment Day (1991)

Within the context of transmediale’s thirty-year anniversary, Inke Arns curates an exhibition titled alien matter. Housed in Haus der Kulturen der Welt, alien matter is a stand-alone product that has been worked on for more than a year, featuring thirty artists from Berlin and beyond. In the introductory text, Arns utilises her background in literature and borrows a quote from J.G. Ballard, an English novelist associated with New Wave science fiction and post-apocalyptic stories. The quote reads:

The only truly alien planet is Earth. – J.G. Ballard in his essay Which Way to Inner Space?

Ballard was redefining the notion of space as ‘outer space’, seemingly beyond the Earth, and ‘inner space’ as the matter constituting the planet we live on. For him, the idea of outer space is irrelevant if we do not fully understand the components of our inner space; as he claims, ‘It is inner space, not outer, that needs to be explored’. The ever-increasing and accelerating infrastructural, and therefore environmental, change caused by humans on our Earth is immense. Arns searches for the ways in which this form of change has contributed to the making of alien matter on a planet we consider secure, familiar and, essentially, our home. In an age in which technological advancements are so severe that machines are taking over human labour, singularity is a predominant theme, whilst the human condition is reaching a deadlock in more ways than we can predict. The works shown in alien matter respond to this deadlock by shedding their status as mere objects of utility and evolving into autonomous agents, thus posing the question, ‘where does agency lie?’

Entering the space housing alien matter, one is immediately confronted with a giant wall – not one like Trump’s, but instead a structure made out of approximately 20,000 obsolete VHS tapes on wooden shelves. It is Joep van Liefland’s Video Palace #44, hollowed inside with a green glow coming from within at its entry point. The audience has the opportunity to enter the palace and be encapsulated within its plastic and green fluorescent walls, reminiscent perhaps of old video rental stores with an added touch of neon. The massive sculpture acts as an archaeological monument. It highlights one of the subcategories into which Arns divides alien matter, (The Outdatedness of) Plastic(s); the rest are as follows: (The Outdatedness of) Artificial Intelligence, (The Outdatedness of) Infrastructure and (The Outdatedness of) Internet(s) of Things.

Part of Plastic(s) is Morehshin Allahyari and Daniel Rourke’s project titled The 3D Additivist Cookbook, which made its conceptual debut at last year’s transmediale festival. In collaboration with Ami Drach, Dov Ganchrow, Joey Holder and Kuang-Yi Ku, the Cookbook examines 3D printing as possessing innovative capabilities to further the functions of human activities in a post-human age. The 3D printer is no longer just an object for realising speculative ideas, but instead is manifested as a means of creating items that may initially (and currently) be considered alien for human utility. Kuang-Yi Ku’s contribution, The Fellatio Modification Project, for example, applies biological techniques of dentistry through 3D printing in order to enhance sexual pleasure. Through The 3D Additivist Cookbook, plastic is transformed into a material with infinite possibilities, which may also be considered alien because of their unfamiliarity to humans.

Alienness and unfamiliarity are also prevalent in the way the works are laid out and lit throughout the exhibition. Apart from Video Palace #44, the exhibition space is devoid of walls and rooms. Instead, what we witness are erect structures, or tripods, clasping screens and lights. These architectural constructions are, as Arns points out in the interview we conducted, reminiscent of the extraterrestrial tripods invading the Earth in H.G. Wells’ science fiction novel The War of the Worlds, initially illustrated by Warwick Goble in 1898. The perception of alien matter is enriched through this witty application of technical requirements as audiences wander amongst unknown fabrications.

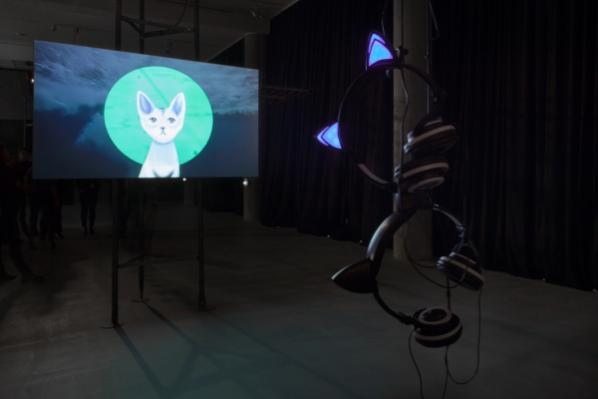

Amidst and through these alien structures, screens become manifestations for expressive AIs. Pinar Yoldas’ Artificial Intelligence for Governance, the Kitty AI envisages the world in the near future, 2039. Now, in the year 2017, Kitty AI appears to the viewer as a slightly humorous political statement; however, much of what Kitty is saying may not be far from speculation. Kitty AI appears in the form of rudimentary and aged video graphics of a cute kitten, possibly so as not to alarm humans with its words. It speaks against paralysed politicians and expounds on the overloaded infrastructures of human settlement, the on-going refugee crisis, still happening in 2039 but on a larger scale, and… love.

The Kitty AI is ‘running our lives, controlling all the systems it learns for us’, providing us with a politician-free zone. It states that it ‘can love up to three million people at the time’ and that it ‘cares and cares about you’. Kitty AI has evolved and possesses the capacity to fulfil our most base desires and needs – solutions to problems of which humans are intrinsically the cause. Kitty AI is a perfect example of the theory Paul Virilio sets out in his book A Landscape of Events:

And so we went from the metempsychosis of the evolutionary monkey to the embodiment of a human mind in an android; why not move on after that to those evolving machines whose rituals could be jolted into action by their own energy potential. – Paul Virilio in his book A Landscape of Events

Virilio doesn’t necessarily condemn the evolution of AIs; humans had the equal opportunity to progress throughout the years. Instead, his concern is that this evolution is unpredictably diminishing human agency. The starting stage for this loss of agency would be the fabrication of algorithms with the ability to speculate on possible scenarios or futures. Such is the work of Nicolas Maigret and Maria Roszkowska titled Predictive Art Bot. Almost nonsensical and increasingly witty, the Predictive Art Bot borrows headlines from global market developments, purchasing behaviour, phrases from websites containing articles about digital art and hacktivism, and sometimes even crimes to create its own hypothetical, yet conceivable, storyboards. The interchange of concepts, ranging from economics to ecologies, art, transhumanism and even medicine, pertains to subjects like ‘tactical self-driving cars’ and ‘radical pranks’ for disruption, ‘political drones’ and even ‘hardcore websites perverting the female entity’.

To a certain degree, both Kitty AI and Predictive Art Bot could be seen as radical statements regarding the future of human agency, particularly in politics. There is always an underlying danger regarding fading human agency and its importance for both these works and imagined scenarios – particularly when taking into consideration Sascha Pohflepp’s Recursion.

Recursion, acted by Erika Ostrander, is an attempt by an AI to speak about human ideas coming from Wikipedia, songs by The Beatles and Joni Mitchell, and even philosophy by Hegel, regarding ‘self-consciousness’, ‘sexual consciousness’, the ‘good form of the economy’, and ‘the reality of social contract’. Ostrander’s performance of the piece is uncannily close to how we might expect AIs to understand and read through language regarding these subjects. The AI has been programmed to compose a text from these readings starting with the word ‘human’ – the result is a computer which passes a Turing test, almost mimetic of what in its own eyes is considered an ‘other’, in which we can understand that simulacra gain dialectical power as the slippage becomes mutual. Simultaneously, these words are performed by a seemingly human entity, posing the question: have we been aliens within all along, without self-conscious awareness?

Throughout alien matter it becomes gradually apparent that the reason why AIs are problematic to agency is their ability to imitate or even be connected to a natural entity. In Ignas Krunglevičius’ video, Hard Body Trade, we are encapsulated by panoramic landscapes of mountains complemented by soothing chords and a dynamic sub-bass as a soundtrack. Over it, the AI says ‘we are sending you a message in real time’ and tells us to be afraid, as they are ‘the brand new’ and ‘wear masks just like you’, implying that they now emulate human personas. The time-lapse continues and the AI echoes, ‘we are replacing things with math while your ideas are building in your body like fat’ – are humans reaching a point of finitude in a landscape whereby everything moves much faster than ourselves?